DoomHUD: an adventure in Agentic Coding

Yesterday, I spent a few intense hours building a product from scratch with the help of AI agents. The idea was simple on paper: a macOS HUD that looks like the lower-third of the DOOM UI. The center should show a live webcam feed. On the left: stats for mouse clicks, keystrokes, and context switches. On the right: number of Git commits. Every minute it should take a screenshot — but only if I’m active — and at the end of the day, generate a timelapse video.

The idea for the project came from “some guy on the internet” many years ago. I am sorry, but the name eludes me. I found the idea fun at the time, but his project was very specific to his needs, and I never got it to run the way I wanted back then. The idea has been in the back of my head for years, and I have tried to half-ass it more than once, but I never got past the initial hurdle of not being a very good developer when it comes to desktop UI. I could create the backing data, get the keystrokes, the mouse clicks and even the Git commits, but I never got around to making anything that looked good. I only ever had snippets of half-working code for parts of it before abandoning the idea again.

But during my tests of agentic workflows, the idea of creating a “real product” from scratch, using only AI, my experience leading a development team, and a pretty clear vision of what I wanted, sounded quite plausible. So I decided to give it another go. I started on it as a side thing while working on real stuff at my day job, but ended up spending the evening honing the project and getting it to “good enough that I could use it”. And it is actually running while I am writing this.

I kicked off the session with a prompt to Claude:

“Help me create a PRD for a product that is a sort of heads-up display… like the lower-third DOOM UI… [with] webcam in the center, activity metrics on the sides, commit count, screenshots, timelapse video… written in Swift and for macOS only…”

I let Claude ask clarifying questions for about 10–20 minutes. The result was a crystal-clear Product Requirements Document (PRD) that covered everything from layout dimensions to permission handling, UI design to file structure.

Once we finalized the PRD, I asked Claude to generate a full implementation plan. It responded with a detailed 8-phase roadmap, outlining 77 individual coding tasks ranging from window setup, event monitoring, and webcam capture to timelapse generation. Each task was time-bounded and clearly scoped, which made the actual build flow incredibly smooth.

Some highlights from the implementation plan:

- Phase 1: Create HUDWindow using NSWindow, always on top at 800×120px.

- Phase 2: Use CGEvent and NSEvent taps for mouse/keyboard input; hook into NSWorkspace for app/context shifts.

- Phase 3: Use AVFoundation for the camera feed and motion detection.

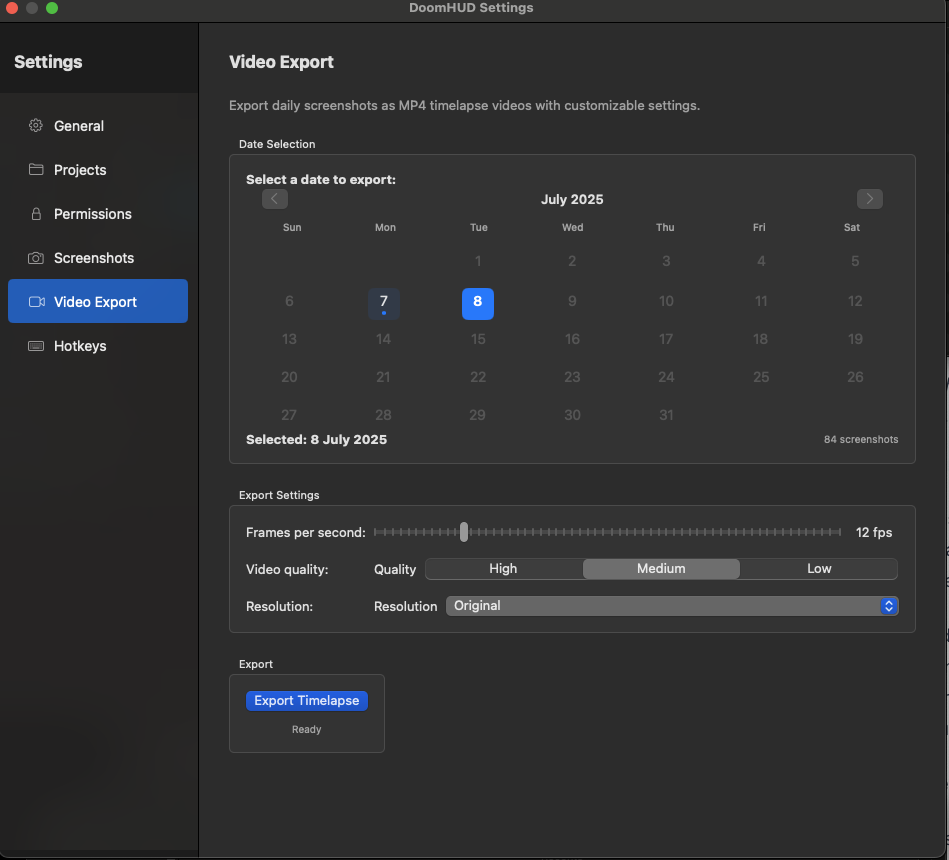

- Phase 5: Screenshot every 60 seconds using CGWindowListCreateImage.

- Phase 6: Assemble screenshots into MP4s with AVAssetWriter.

- Phase 7: Register hotkeys for pause, reset, export, and quit.

- Phase 8: Real-time database persistence, error handling, and performance polishing.
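
The "screenshot every 60 seconds, but only if I'm active" rule can be sketched as a small gate that the screenshot timer consults. This is a minimal sketch, not the project's code: `ActivityGate` is a hypothetical name, and "active" is assumed to mean "a keystroke or click arrived within the last 60 seconds", which the plan does not spell out.

```swift
import Foundation

/// Decides whether the periodic screenshot should be taken.
/// Hypothetical type: "active" is ASSUMED to mean "some input arrived
/// within `idleThreshold` seconds"; the post does not define it.
struct ActivityGate {
    /// Seconds without input before the user counts as idle (assumed value).
    let idleThreshold: TimeInterval
    /// Timestamp of the most recent keystroke or click, if any.
    private(set) var lastInput: Date?

    init(idleThreshold: TimeInterval = 60) {
        self.idleThreshold = idleThreshold
    }

    /// Call this from whatever reports keystrokes and mouse clicks.
    mutating func recordInput(at time: Date = Date()) {
        lastInput = time
    }

    /// True when input was seen recently enough to justify a screenshot.
    func shouldCapture(at now: Date = Date()) -> Bool {
        guard let lastInput = lastInput else { return false }
        return now.timeIntervalSince(lastInput) <= idleThreshold
    }
}
```

A repeating 60-second timer would call `shouldCapture()` on each tick and skip the screenshot when it returns `false`, so idle stretches never end up in the timelapse.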

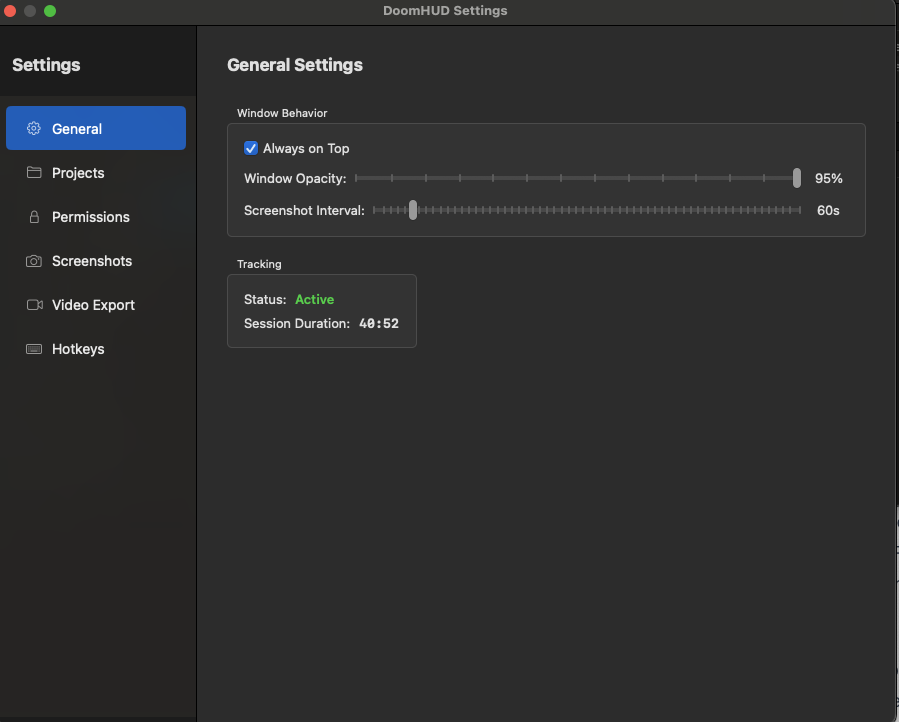

Everything actually went quite smoothly. There were no real roadblocks until we got to testing the “real” application (instead of the development build). That is where the pains of macOS permissions started. For a long time Claude iterated on the same solution, adding and removing the same lines of code multiple times, removing unrelated parts of the code “for testing purposes”, and not finding any real solution to the problem.

The project needs permissions to do input tracking, and Claude repeatedly generated solutions that installed CGEvent taps too late in the application lifecycle. The taps failed silently. No permissions dialog appeared, no crash, just… nothing. And I had no idea what was wrong myself. Trying to guide someone through things I don’t know anything about is hard. So I decided to let ChatGPT be our friend in need, and it dropped a simple but vital insight:

“If you launch the tap too late, the system may ignore the access request, resulting in no dialog… Install the tap as early as possible (e.g., applicationDidFinishLaunching).”

That was the exact symptom we had. With this additional context, Claude correctly placed the tap early and included fallback logic to alert users if the app wasn’t listed under System Settings → Privacy & Security → Input Monitoring. That unblocked the entire input metrics system.
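
To make the "install the tap early, alert if it fails" pattern concrete, here is a rough sketch of what that can look like. It is not the code Claude produced: `InputCounters`, `makeInputEventMask`, and the alert wording are made up for illustration, and it assumes a missing permission shows up as `CGEvent.tapCreate` returning `nil`.

```swift
import AppKit

/// Hypothetical holder for the HUD's input metrics.
final class InputCounters {
    var keystrokes = 0
    var clicks = 0
}

/// Bit mask of the event types the tap listens for.
func makeInputEventMask() -> CGEventMask {
    let types: [CGEventType] = [.keyDown, .leftMouseDown, .rightMouseDown]
    return types.reduce(CGEventMask(0)) { mask, type in
        mask | (CGEventMask(1) << CGEventMask(type.rawValue))
    }
}

final class AppDelegate: NSObject, NSApplicationDelegate {
    let counters = InputCounters()
    private var eventTap: CFMachPort?

    func applicationDidFinishLaunching(_ notification: Notification) {
        // Install the tap first, before any other setup work.
        if !installEventTap() {
            showInputMonitoringAlert()
        }
    }

    /// Returns false when the system refuses to create the tap
    /// (ASSUMED here to be the symptom of a missing permission).
    private func installEventTap() -> Bool {
        // C callback: it cannot capture Swift state, so the counters
        // arrive through the userInfo pointer.
        let callback: CGEventTapCallBack = { _, type, event, userInfo in
            if let userInfo = userInfo {
                let counters = Unmanaged<InputCounters>
                    .fromOpaque(userInfo).takeUnretainedValue()
                switch type {
                case .keyDown: counters.keystrokes += 1
                case .leftMouseDown, .rightMouseDown: counters.clicks += 1
                default: break
                }
            }
            return Unmanaged.passUnretained(event)
        }

        guard let tap = CGEvent.tapCreate(
            tap: .cgSessionEventTap,
            place: .headInsertEventTap,
            options: .listenOnly,          // observe only, never modify events
            eventsOfInterest: makeInputEventMask(),
            callback: callback,
            userInfo: Unmanaged.passUnretained(counters).toOpaque()
        ) else { return false }

        // Deliver tap events on the main run loop.
        let source = CFMachPortCreateRunLoopSource(kCFAllocatorDefault, tap, 0)
        CFRunLoopAddSource(CFRunLoopGetMain(), source, .commonModes)
        CGEvent.tapEnable(tap: tap, enable: true)
        eventTap = tap
        return true
    }

    private func showInputMonitoringAlert() {
        let alert = NSAlert()
        alert.messageText = "Input Monitoring permission needed"
        alert.informativeText = "Enable this app under System Settings → Privacy & Security → Input Monitoring, then relaunch."
        alert.runModal()
    }
}
```

The delegate keeps a reference to the tap so it stays alive for the lifetime of the app, and the counters are passed unretained because the delegate itself owns them.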

Another issue surfaced in the hotkey system: occasional crashes when assigning callbacks.

Claude couldn’t detect it, but I found the error messages in Console.app. Once I gave it the exact log trace, it quickly identified the root cause (a retain cycle causing a deallocation crash) and patched it with a proper weak reference in the closure’s capture list. The key to success in these types of projects is contextual reinforcement: AI gets noticeably better when you share logs, failures, and edge-case constraints.
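
For readers who have not run into this before, the shape of a weak capture list fix looks roughly like the sketch below. The project's actual hotkey code is not shown in this post, so `HotkeyRegistry`, `HUDController`, and the `"pause"` key are hypothetical stand-ins.

```swift
import Foundation

/// Hypothetical registry that stores one callback per hotkey name.
final class HotkeyRegistry {
    private var handlers: [String: () -> Void] = [:]

    func register(_ name: String, handler: @escaping () -> Void) {
        handlers[name] = handler
    }

    func trigger(_ name: String) {
        handlers[name]?()
    }
}

/// Hypothetical owner of the registry.
final class HUDController {
    let registry = HotkeyRegistry()
    private(set) var isPaused = false

    init() {
        // The controller owns the registry, and the registry owns this
        // closure. Capturing `self` strongly here would close the loop
        // (controller -> registry -> closure -> controller), so the
        // closure captures it weakly instead.
        registry.register("pause") { [weak self] in
            self?.isPaused.toggle()
        }
    }
}
```

With `[weak self]` the closure no longer keeps the controller alive, so dropping the last outside reference to the controller frees it along with its registry and callbacks.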

Cue: screenshots.

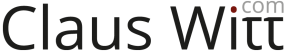

The main window:

The general settings

The permissions page

The video export page

The project is available on GitHub, along with the initial PRD and development plan. There are probably some hardcoded paths, and a bundle identifier, that you would want to change if you want to use this. But all in all, I think it works as I want it to.

Oh, except that I want it to be always on top, and without the annoying macOS window chrome… but for the love of whatevers, I cannot get Claude to fix it for me, so I will probably need to look into this myself… if I get around to it. (PRs welcome though, if you, or your agents, can fix that for me!)
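
For anyone tempted by that PR, this is the usual AppKit recipe for a chrome-less window that floats above others. It is an untested-against-this-project sketch: `HUDWindow` and `makeHUDWindow` are hypothetical names, the 800×120 size comes from the plan above, and it may not apply as-is if the app creates its window some other way.

```swift
import AppKit

/// Hypothetical HUD window subclass. Borderless windows refuse key
/// status by default; allowing it lets controls inside the HUD still
/// receive keyboard input.
final class HUDWindow: NSWindow {
    override var canBecomeKey: Bool { true }
}

/// Builds a window with no title bar that stays above normal windows.
func makeHUDWindow() -> HUDWindow {
    let window = HUDWindow(
        contentRect: NSRect(x: 0, y: 0, width: 800, height: 120),
        styleMask: [.borderless],           // no title bar or window buttons
        backing: .buffered,
        defer: false
    )
    window.level = .floating                // stay above normal windows
    window.collectionBehavior = [.canJoinAllSpaces, .fullScreenAuxiliary]
    window.isMovableByWindowBackground = true  // no title bar to drag by
    window.isReleasedWhenClosed = false
    return window
}
```

The window would then be shown with `makeKeyAndOrderFront(nil)` once the app has finished launching.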